When I first started writing applications that needed to handle multiple network requests, I did it the simple, synchronous way. The program would make one request, wait for the response, make the next, and so on. It was slow and inefficient because my program spent most of its time just waiting. This is a classic I/O-bound problem, and the solution in modern Python is asyncio.

Table of Contents

- 1.1 What Are the Core Concepts of Asyncio?
  - 1.1.1 When to Use Asyncio: I/O-Bound vs. CPU-Bound
- 1.2 How to Get Started with a Simple Async Program
- 1.3 Working with Tasks for Background Execution
- 1.4 Essential Synchronization Primitives
- 1.5 Common Concurrency Patterns I Use
  - 1.5.1 Fan-Out/Fan-In
  - 1.5.2 Producer-Consumer
- 1.6 Asyncio vs. Multithreading vs. Multiprocessing: How to Choose
- 1.7 Conclusion
- 1.8 More Topics

Asynchronous programming allows a single thread to manage many tasks by switching between them whenever one is waiting for an operation (like a network call) to complete. It’s a powerful way to build fast, responsive applications that handle high levels of concurrency without the overhead of threads. In this guide, I’ll walk you through the core concepts of asyncio, show you how to get started, and cover the essential patterns I use to write effective async code.

What Are the Core Concepts of Asyncio?

To get started, you need to understand a few key ideas that make asynchronous programming work in Python. It’s a bit of a mind-shift from regular synchronous code, but once it clicks, it’s incredibly powerful.

- Coroutines (async def): These are the heart of asyncio. Defining a function with async def marks it as pausable and resumable. Calling an async function doesn’t run its body right away. Instead, it returns a coroutine object, which only runs once it is awaited, wrapped in a task, or handed to the event loop (for example with asyncio.run()).
- The Event Loop: This is the engine of asyncio. It’s a loop that runs in a single thread and manages all your asynchronous tasks, keeping track of which ones are ready to run and which are paused, waiting for something to happen.
- The await Keyword: This is how a coroutine voluntarily pauses itself and gives control back to the event loop. When your code hits an await expression, the event loop can switch to running another task until the awaited operation completes.
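Here is a minimal sketch of the first and third points in action. greet and demo are illustrative names and are not part of asyncio. Calling greet() only builds a coroutine object. Nothing in its body runs until asyncio.run() hands it to the event loop.

```python
import asyncio


async def greet(name: str) -> str:
    # await suspends this coroutine; the event loop could run other tasks meanwhile
    await asyncio.sleep(0.1)
    return f"Hello, {name}"


def demo() -> str:
    coro = greet("asyncio")      # nothing runs yet: this is just a coroutine object
    print(type(coro).__name__)   # prints "coroutine"
    return asyncio.run(coro)     # the event loop drives the coroutine to completion


if __name__ == "__main__":
    print(demo())
```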

When to Use Asyncio: I/O-Bound vs. CPU-Bound

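I can’t stress this enough: asyncio is designed for I/O-bound tasks. These are jobs where the program spends most of its time waiting for external resources, such as network requests, database queries, or file operations. Ordinary file reads and writes are blocking calls, though, so inside asyncio they are usually offloaded to a thread, for example with asyncio.to_thread().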

It is not a good fit for CPU-bound tasks, which involve heavy calculations that keep the processor busy. Since asyncio runs on a single thread, a long-running CPU-bound task will block the entire event loop, preventing any other tasks from running. For those scenarios, you should use the multiprocessing module instead.
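The difference is easy to measure. In this sketch, time.sleep() stands in for a long CPU-bound computation, and any call that never reaches an await behaves the same way. asyncio.sleep() stands in for a network wait. The job and helper names are made up for illustration.

```python
import asyncio
import time


async def blocking_job() -> None:
    # time.sleep never yields to the event loop, just like a long CPU-bound loop
    time.sleep(0.2)


async def cooperative_job() -> None:
    # asyncio.sleep yields control, so other tasks run while this one waits
    await asyncio.sleep(0.2)


async def timed(job, n: int = 3) -> float:
    # Run n copies of the job "concurrently" and measure the wall-clock time
    start = time.perf_counter()
    await asyncio.gather(*(job() for _ in range(n)))
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"blocking:    {asyncio.run(timed(blocking_job)):.2f}s")
    print(f"cooperative: {asyncio.run(timed(cooperative_job)):.2f}s")
```

Expect the blocking version to take roughly three times as long, because each job holds the event loop until it returns and the three jobs end up running back to back.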

How to Get Started with a Simple Async Program

The easiest way to run a coroutine is with asyncio.run(). This function handles starting and stopping the event loop for you and has been the standard entry point for an asyncio application since Python 3.7.

Let’s look at a practical example: fetching several web pages concurrently using the popular aiohttp library.

```python
import asyncio
import time

import aiohttp


async def fetch_url(session, url):
    print(f"Fetching {url}")
    async with session.get(url) as response:
        # The 'await' here pauses this coroutine while waiting for the response
        data = await response.text()
        return data[:50]  # Return first 50 characters


async def main():
    urls = [
        "http://example.com",
        "http://httpbin.org/delay/1",
        "http://example.org",
    ]
    start_time = time.time()
    async with aiohttp.ClientSession() as session:
        # Create a list of coroutines to run
        coros = [fetch_url(session, url) for url in urls]
        # Run them all concurrently and wait for the results
        results = await asyncio.gather(*coros)
    duration = time.time() - start_time
    for url, result in zip(urls, results):
        print(f"{url}: {result}...")
    print(f"Fetched {len(urls)} URLs in {duration:.2f} seconds")


if __name__ == "__main__":
    asyncio.run(main())
```

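In my experience, this code demonstrates the magic of asyncio perfectly. Run one after another, the three requests would take the sum of their individual times: the 1-second server-side delay from httpbin plus the round trips to the other two sites. Run concurrently, the program finishes in roughly the time of the slowest request, just over 1 second, because the waiting times for the network requests overlap.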
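You can reproduce the timing effect without network access. The sketch below swaps the HTTP calls for asyncio.sleep() with assumed latencies. LATENCIES and fake_fetch are hypothetical. The sequential version awaits each request in turn, while the concurrent version hands them all to asyncio.gather().

```python
import asyncio
import time

# Hypothetical per-request latencies in seconds, standing in for real HTTP round trips
LATENCIES = {"a": 0.1, "b": 0.3, "c": 0.1}


async def fake_fetch(name: str) -> str:
    await asyncio.sleep(LATENCIES[name])  # simulated network wait
    return name


async def sequential() -> float:
    start = time.perf_counter()
    for name in LATENCIES:
        await fake_fetch(name)  # each request starts only after the previous one ends
    return time.perf_counter() - start


async def concurrent() -> float:
    start = time.perf_counter()
    await asyncio.gather(*(fake_fetch(n) for n in LATENCIES))  # the waits overlap
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"sequential: {asyncio.run(sequential()):.2f}s")  # close to the sum of latencies
    print(f"concurrent: {asyncio.run(concurrent()):.2f}s")  # close to the largest latency
```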

Working with Tasks for Background Execution

While asyncio.gather is great for running a batch of coroutines and waiting for them all, sometimes you want to start a task in the background and continue with other work. For this, you use asyncio.create_task().

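This function schedules a coroutine to run on the event loop as soon as the current coroutine yields control, and it returns a Task object. You can think of it as “fire and forget,” though you can (and should) await the task object later to get its result or see if it raised an exception. Keep a reference to the task as well. The event loop holds only a weak reference to tasks, so a task with no other references can be garbage-collected before it finishes.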

```python
import asyncio


async def work(task_id):
    print(f"Task {task_id}: starting")
    await asyncio.sleep(2)
    print(f"Task {task_id}: finished")
    return f"result{task_id}"


async def main():
    # Start two tasks in the background
    task1 = asyncio.create_task(work(1))
    task2 = asyncio.create_task(work(2))
    print("Tasks created, now waiting for results...")
    # Wait for both tasks to complete
    results = await asyncio.gather(task1, task2)
    print("Both tasks done, results:", results)


if __name__ == "__main__":
    asyncio.run(main())
```
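There is one catch with “fire and forget.” The event loop keeps only a weak reference to tasks, so a task you never store anywhere can be garbage-collected before it finishes. The asyncio documentation suggests keeping tasks in a set and discarding each one when it completes. Below is a minimal sketch of that idea. background_tasks, fire_and_forget and log_event are hypothetical names.

```python
import asyncio

# Hypothetical module-level set that holds strong references to running tasks
background_tasks: set[asyncio.Task] = set()


async def log_event(name: str, sink: list[str]) -> None:
    await asyncio.sleep(0.01)  # simulated I/O, e.g. shipping a log line
    sink.append(name)


def fire_and_forget(coro) -> asyncio.Task:
    task = asyncio.create_task(coro)
    background_tasks.add(task)  # strong reference keeps the task alive
    task.add_done_callback(background_tasks.discard)  # drop it once the task finishes
    return task


async def main() -> list[str]:
    sink: list[str] = []
    for name in ("a", "b", "c"):
        fire_and_forget(log_event(name, sink))
    # Other work would go here; for the demo we simply wait long enough
    await asyncio.sleep(0.1)
    return sink


if __name__ == "__main__":
    print(asyncio.run(main()))
```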

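You can also cancel a task using its .cancel() method. This throws a CancelledError into the task at the point where it is suspended (or before it starts, if it hasn’t run yet). The task then has a chance to perform cleanup before exiting, typically in a try/finally or except block that re-raises the error. Code that awaits the cancelled task also receives a CancelledError. Proper error handling is key to building robust async applications.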
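Here is a minimal sketch of cancellation with cleanup. worker and the log list are illustrative. The worker catches CancelledError, records its cleanup step and re-raises, so the task is properly marked as cancelled. The caller then sees the cancellation when it awaits the task.

```python
import asyncio


async def worker(log: list[str]) -> None:
    try:
        log.append("started")
        await asyncio.sleep(10)   # long wait that will be interrupted
        log.append("finished")    # never reached in this demo
    except asyncio.CancelledError:
        log.append("cleaning up")  # release resources here
        raise  # re-raise so the task ends in the cancelled state


async def main() -> list[str]:
    log: list[str] = []
    task = asyncio.create_task(worker(log))
    await asyncio.sleep(0.1)  # give the worker a chance to start
    task.cancel()
    try:
        await task
    except asyncio.CancelledError:
        log.append("main saw cancellation")
    return log


if __name__ == "__main__":
    print(asyncio.run(main()))  # ['started', 'cleaning up', 'main saw cancellation']
```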

Essential Synchronization Primitives

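Even though asyncio runs on a single thread, you still sometimes need to coordinate tasks or protect shared resources. Any await inside a read-modify-write sequence is a point where another task can run and change the same data. asyncio provides asynchronous versions of common synchronization tools.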

- Lock: Ensures that only one coroutine can access a critical section of code at a time. I wrap the critical section in an async with lock: block to make sure a shared variable is updated safely without race conditions.

- Semaphore: Similar to a lock, but it allows a limited number of coroutines to enter a section at once. This is perfect for capping the number of concurrent API calls or limiting concurrent access to a resource pool.

- Event: A simple flag that one coroutine can set to signal other waiting coroutines to proceed. I find this useful for managing startup sequences where worker tasks need to wait for a configuration to be loaded.
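The three primitives can be sketched together in one small program. The setup is hypothetical:

- Six workers wait on an Event that stands in for "configuration loaded."
- A Semaphore(2) caps how many workers are inside the simulated I/O section at once.
- A Lock protects a counter whose read-modify-write contains an await.

```python
import asyncio


async def main() -> tuple[int, int]:
    lock = asyncio.Lock()
    sem = asyncio.Semaphore(2)      # at most 2 workers in the guarded section
    config_ready = asyncio.Event()  # hypothetical "config loaded" signal
    counter = 0
    active = 0
    peak = 0

    async def worker() -> None:
        nonlocal counter, active, peak
        await config_ready.wait()        # Event: block until set() is called
        async with sem:                  # Semaphore: limit concurrency to 2
            active += 1
            peak = max(peak, active)
            await asyncio.sleep(0.01)    # simulated I/O
            active -= 1
        async with lock:                 # Lock: protect a read-modify-write
            current = counter
            await asyncio.sleep(0)       # suspension point inside the critical section
            counter = current + 1

    workers = [asyncio.create_task(worker()) for _ in range(6)]
    await asyncio.sleep(0.01)            # pretend to load configuration
    config_ready.set()
    await asyncio.gather(*workers)
    return counter, peak


if __name__ == "__main__":
    print(asyncio.run(main()))  # (6, 2): every increment counted, never more than 2 in the I/O section
```

Without the lock, the await between reading and writing counter could let other workers interleave, and some increments could be lost.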

Common Concurrency Patterns I Use

Over time, you’ll notice a few patterns appearing again and again in asyncio code.

Fan-Out/Fan-In

This is the pattern we saw in the first aiohttp example. You “fan-out” by creating many concurrent tasks and then “fan-in” by using asyncio.gather() to wait for them all to complete and collect their results. It’s the go-to pattern for running independent I/O operations concurrently.
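One fan-in detail is worth knowing. By default, the first exception raised by any coroutine propagates out of asyncio.gather(). Passing return_exceptions=True makes gather return exceptions alongside the successful results instead, in input order. In this sketch, lookup is a hypothetical I/O-bound call, and input 3 simulates a failed request.

```python
import asyncio


async def lookup(n: int) -> int:
    # Hypothetical I/O-bound call; n == 3 simulates a failed request
    await asyncio.sleep(0.01 * n)
    if n == 3:
        raise ValueError(f"request {n} failed")
    return n * n


async def main() -> list:
    # Fan-out: one coroutine per input
    jobs = [lookup(n) for n in range(1, 6)]
    # Fan-in: results keep input order; exceptions are returned, not raised
    return await asyncio.gather(*jobs, return_exceptions=True)


if __name__ == "__main__":
    for result in asyncio.run(main()):
        print("error:" if isinstance(result, Exception) else "ok:", result)
```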

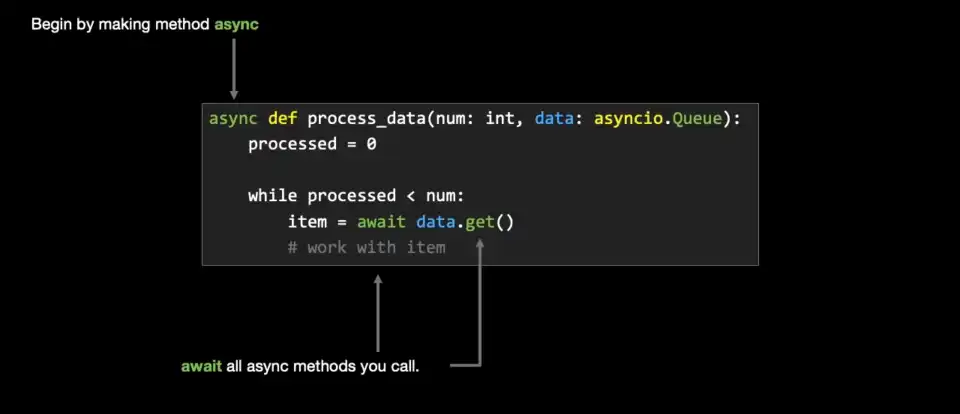

Producer-Consumer

This pattern decouples the task of generating work from the task of doing the work. Producers add items to an asyncio.Queue, and consumers pull items from the queue to process them. The queue handles the synchronization: consumers wait until items become available, and producers can add items without waiting for consumers. The exception is a queue created with a maxsize, where put() waits while the queue is full. That bound is a simple way to apply backpressure.
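Below is a minimal producer-consumer sketch with asyncio.Queue. The item values, the maxsize of 5 and the three-consumer setup are arbitrary choices for illustration. queue.join() waits until task_done() has been called for every item. The idle consumers are then cancelled, because their loops never end on their own.

```python
import asyncio


async def producer(queue: asyncio.Queue, items: list[int]) -> None:
    for item in items:
        await queue.put(item)  # waits only while the queue is full


async def consumer(queue: asyncio.Queue, results: list[int]) -> None:
    while True:
        item = await queue.get()  # waits until an item is available
        try:
            await asyncio.sleep(0.01)  # simulated I/O work per item
            results.append(item * 10)
        finally:
            queue.task_done()  # lets queue.join() track completion


async def main() -> list[int]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=5)
    results: list[int] = []
    consumers = [asyncio.create_task(consumer(queue, results)) for _ in range(3)]
    await producer(queue, list(range(10)))
    await queue.join()  # every item has been processed
    for c in consumers:
        c.cancel()      # consumers loop forever, so stop them explicitly
    await asyncio.gather(*consumers, return_exceptions=True)
    return sorted(results)


if __name__ == "__main__":
    print(asyncio.run(main()))
```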

Asyncio vs. Multithreading vs. Multiprocessing: How to Choose

Choosing the right concurrency model is crucial. Here’s a quick breakdown of how I decide:

| Model | Best For | GIL Impact | Parallelism | Key Benefit |
| --- | --- | --- | --- | --- |
| Asyncio | I/O-bound, high concurrency | GIL applies, runs on 1 core | No (Concurrent) | Very efficient for thousands of waiting tasks. |
| Multithreading | I/O-bound | GIL limits to 1 core at a time | No (Concurrent) | Good for overlapping I/O in legacy code. |
| Multiprocessing | CPU-bound | Bypasses GIL | Yes (True Parallelism) | Uses all available CPU cores for heavy computation. |

In many complex applications, I’ve found a hybrid approach works best: using asyncio as the main I/O handler and offloading heavy CPU-bound tasks to a process pool from the concurrent.futures module.
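One way to wire up that hybrid is loop.run_in_executor(), which runs a plain function in a concurrent.futures executor and returns an awaitable. In this sketch, cpu_heavy and io_task are hypothetical stand-ins. run_hybrid accepts any Executor, so a ThreadPoolExecutor can be swapped in for quick tests. For real CPU-bound work you would pass a ProcessPoolExecutor, as the __main__ block does.

```python
import asyncio
import concurrent.futures


def cpu_heavy(n: int) -> int:
    # Hypothetical CPU-bound work: sum of squares in pure Python
    return sum(i * i for i in range(n))


async def io_task() -> str:
    await asyncio.sleep(0.1)  # simulated network wait
    return "io done"


async def run_hybrid(executor: concurrent.futures.Executor) -> tuple[int, str]:
    loop = asyncio.get_running_loop()
    # Offload the CPU-bound call; the event loop stays free for I/O meanwhile
    cpu_future = loop.run_in_executor(executor, cpu_heavy, 1_000_000)
    total, status = await asyncio.gather(cpu_future, io_task())
    return total, status


if __name__ == "__main__":
    # The __main__ guard matters: worker processes may re-import this module
    with concurrent.futures.ProcessPoolExecutor() as pool:
        print(asyncio.run(run_hybrid(pool)))
```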

Conclusion

asyncio is a powerful tool for building high-performance I/O-bound applications in Python. By leveraging coroutines, the event loop, and the async/await syntax, you can handle thousands of concurrent operations with minimal overhead. While it requires a different way of thinking compared to synchronous code, mastering patterns like fan-out/fan-in and producer-consumer will allow you to write incredibly efficient and scalable systems.

More Topics

- Python’s Itertools Module – How to Loop More Efficiently

- Python Multithreading – How to Handle Concurrent Tasks

- Python Multiprocessing – How to Use Multiple CPU Cores

- Python Data Serialization – How to Store and Transmit Your Data

- Python Context Managers – How to Handle Resources Like a Pro

- Python Project Guide: NumPy & SciPy: For Science!

- Python Project Guide: Times, Dates & Numbers