When you’re working on a Python application, your data—whether it’s user settings, application state, or the results of a long computation—exists only in memory. Once the program closes, it’s all gone. That’s where data serialization and persistence come in; these are the essential techniques I use to save data for later, transmit it across a network, or share it between different systems.

Table of Contents

- 1.1 JSON: The Universal Language of Data

- 1.1.1 Converting Python Objects to JSON Strings (dumps)

- 1.1.2 Parsing JSON Strings into Python Objects (loads)

- 1.2 Pickle: For Python-Exclusive, Complex Objects

- 1.2.1 A Critical Security Warning

- 1.2.2 Using dump and load

- 1.3 How I Choose: Pickle vs. JSON

- 1.4 Shelve: A Persistent Dictionary

- 1.5 Other Useful Formats

- 1.6 Conclusion

Serialization is the process of converting a Python object into a format (like a string or a byte stream) that can be stored or transmitted. Persistence simply means ensuring that data survives after the program that created it has finished running. In this guide, I’ll walk you through the most common tools Python offers for this, explaining how I choose the right one for the job.

JSON: The Universal Language of Data

JSON (JavaScript Object Notation) is my default choice for most data exchange tasks, especially in web development. It’s a lightweight, text-based format that’s incredibly easy for both humans and machines to read. Python’s built-in json module makes working with it a breeze.

Converting Python Objects to JSON Strings (dumps)

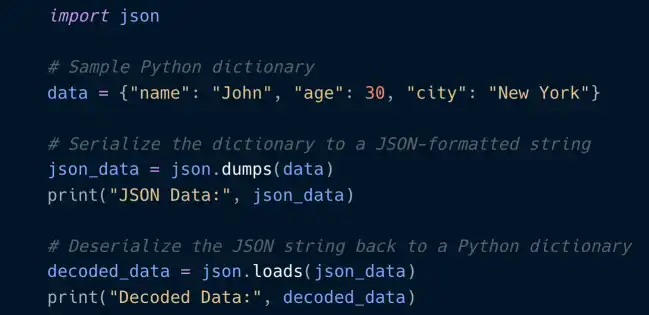

To serialize a Python object (like a dictionary or a list) into a JSON-formatted string, I use json.dumps(). This function has a lot of useful parameters, but the ones I use most often are indent for pretty-printing and default for handling objects that JSON doesn’t know how to serialize.

For example, this function from the LangChain library uses indent=2 to create a nicely formatted, human-readable JSON string.

Python

# File: libs/core/langchain_core/load/dump.py

def dumps(obj: Any, *, pretty: bool = False, **kwargs: Any) -> str:
    """Return a json string representation of an object."""
    try:
        if pretty:
            indent = kwargs.pop("indent", 2)
            return json.dumps(obj, default=default, indent=indent, **kwargs)
        else:
            return json.dumps(obj, default=default, **kwargs)
    except TypeError:
        # ... fallback logic ...
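
To see these two parameters without the surrounding library code, here is a minimal sketch. The `encode_extra` helper and the sample `record` are hypothetical names I made up for illustration; the key point is that `default` is only called for objects the encoder cannot serialize on its own.

```python
import json
from datetime import date


def encode_extra(obj):
    """Hypothetical fallback passed as `default`; called only for unsupported types."""
    if isinstance(obj, date):
        return obj.isoformat()
    if isinstance(obj, set):
        return sorted(obj)  # JSON has no set type, so emit a sorted list
    raise TypeError(f"Cannot serialize {type(obj).__name__}")


record = {"name": "Alice", "joined": date(2024, 1, 15), "tags": {"admin", "beta"}}

# indent=2 pretty-prints; default=encode_extra handles the date and the set
pretty = json.dumps(record, indent=2, default=encode_extra)
print(pretty)
```

Raising `TypeError` at the end of the fallback matters: it keeps the standard error behavior for any type the helper does not recognize.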

Parsing JSON Strings into Python Objects (loads)

To do the reverse—deserialize a JSON string back into a Python object—I use json.loads(). This function is powerful because you can pass it a custom function (an object_hook) to intercept the decoding process. This is perfect for transforming JSON objects into custom Python class instances.

The Apache Superset project uses an object_hook called decode_dashboards to reconstruct Dashboard, Slice, and other custom objects directly from the JSON content.

Python

# File: superset/commands/dashboard/importers/v0.py

def decode_dashboards(o: dict[str, Any]) -> Any:
    """Recreates the dashboard object from a json representation."""
    if "__Dashboard__" in o:
        return Dashboard(**o["__Dashboard__"])
    if "__Slice__" in o:
        return Slice(**o["__Slice__"])
    # ... more custom object checks ...
    return o

def import_dashboards(content: str, ...) -> None:
    data = json.loads(content, object_hook=decode_dashboards)
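
The same pattern can be tried in isolation. This is a small sketch, not Superset's code: the `Point` class, the `__Point__` marker key, and `decode_point` are all hypothetical names chosen for the example. The hook is called once for every decoded JSON object, innermost objects first.

```python
import json
from dataclasses import dataclass


@dataclass
class Point:
    """Hypothetical class used only for this illustration."""
    x: int
    y: int


def decode_point(obj: dict):
    """Turn {"__Point__": {...}} into a Point; leave other dicts untouched."""
    if "__Point__" in obj:
        return Point(**obj["__Point__"])
    return obj


text = '{"label": "origin", "position": {"__Point__": {"x": 0, "y": 0}}}'
data = json.loads(text, object_hook=decode_point)
print(data)
```

After decoding, `data["position"]` is a `Point` instance rather than a nested dictionary, while the outer object stays a plain `dict`.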

For tasks involving reading and writing files in Python, the json module also provides json.dump() and json.load(), which work directly with file-like objects instead of strings.
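
As a quick sketch of the file-based pair, the snippet below writes a dictionary to disk and reads it back. The file name `settings.json` and the sample `settings` dictionary are assumptions for the example, and a temporary directory is used so nothing is left behind.

```python
import json
import os
import tempfile

settings = {"theme": "dark", "retries": 3}

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "settings.json")  # hypothetical file name

    # json.dump writes straight to the open file object
    with open(path, "w", encoding="utf-8") as f:
        json.dump(settings, f, indent=2)

    # json.load reads and parses from the file object
    with open(path, encoding="utf-8") as f:
        restored = json.load(f)

print(restored == settings)
```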

Pickle: For Python-Exclusive, Complex Objects

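When I need to serialize complex, Python-specific objects, such as instances of custom classes, I turn to the pickle module. Pickling converts a Python object into a byte stream, and unpickling reverses the process. Keep in mind that pickle saves an instance's state along with a reference to its class by name, not the class's code, so the class definition must be importable wherever you unpickle. It can handle a much wider range of Python types than JSON can.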

A Critical Security Warning

Before going further, I have to stress this point: The pickle module is not secure. You should only ever unpickle data that you absolutely trust. A maliciously crafted pickle file can execute arbitrary code on your machine during unpickling, which is a major security risk.
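
To make the risk concrete, here is a deliberately harmless sketch. The `Payload` class is hypothetical; its `__reduce__` method tells pickle which callable to invoke when the bytes are loaded. I use `eval` on a piece of arithmetic here, but an attacker could just as easily name a function that runs shell commands.

```python
import pickle


class Payload:
    """Hypothetical stand-in for attacker-controlled data."""

    def __reduce__(self):
        # Instructs pickle to call eval("6 * 7") at load time
        return (eval, ("6 * 7",))


untrusted_bytes = pickle.dumps(Payload())

# Merely loading the bytes executes the embedded call
result = pickle.loads(untrusted_bytes)
print(result)
```

Notice that the loaded object is not a `Payload` at all: it is whatever the embedded call returned, which shows the code ran during unpickling.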

Using dump and load

Similar to the json module, pickle has dump() to write a serialized object to a file and load() to read it back.

In this example from the Scrapy web-crawling framework, response metadata is saved to a file named pickled_meta using pickle.dump() for caching. The _read_meta function later uses pickle.load() to retrieve that cached data, avoiding the need to re-fetch the web page.

Python

# File: scrapy/extensions/httpcache.py

class FilesystemCacheStorage:
    def store_response(self, spider: Spider, request: Request, response: Response) -> None:
        """Store the given response in the cache."""
        # ... rpath (the cache directory for this request) is computed here ...
        metadata = {
            "url": request.url,
            "method": request.method,
            "status": response.status,
            # ... more metadata
        }
        with self._open(rpath / "pickled_meta", "wb") as f:
            pickle.dump(metadata, f, protocol=4)

    def _read_meta(self, spider: Spider, request: Request) -> Optional[Dict[str, Any]]:
        # ... rpath is computed here ...
        metapath = rpath / "pickled_meta"
        # ... checks for expiration ...
        with self._open(metapath, "rb") as f:
            return cast(Dict[str, Any], pickle.load(f))
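
For a version you can run on its own, here is a minimal round trip through a file. The `state` dictionary and the `state.pkl` file name are made up for the example; I picked a set, a datetime, and a tuple because JSON cannot represent them natively.

```python
import os
import pickle
import tempfile
from datetime import datetime

state = {
    "visited": {"https://example.com/a", "https://example.com/b"},  # set
    "last_run": datetime(2024, 1, 15, 9, 30),                       # datetime
    "window": (800, 600),                                           # tuple
}

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "state.pkl")  # hypothetical file name

    # Pickle files must be opened in binary mode
    with open(path, "wb") as f:
        pickle.dump(state, f, protocol=pickle.HIGHEST_PROTOCOL)

    with open(path, "rb") as f:
        restored = pickle.load(f)

print(restored == state)
print(type(restored["window"]).__name__)
```

Only load files like this when you created them yourself or fully trust their origin.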

How I Choose: Pickle vs. JSON

Deciding between Pickle and JSON is a common task. Here’s the thought process I go through:

- Who needs to read this data? If the data needs to be read by another language (like JavaScript in a browser) or easily inspected by a human, I always use JSON. If it’s only for internal Python processes, Pickle is an option.

- What kind of data am I storing? For simple data structures like lists and dictionaries, JSON is perfect. For complex custom classes or objects that JSON can’t handle, Pickle is the more powerful choice.

- Is the data source trusted? This is the most important question. If the data comes from an external or untrusted source, I never use Pickle due to the security risks. JSON is much safer in this regard.
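
The "what kind of data" question is easier to judge once you have seen what a JSON round trip does to Python types. This sketch uses a made-up `data` dictionary with an integer key and a tuple value, then tries to encode a set.

```python
import json
import pickle

data = {1: ("a", "b")}  # int key, tuple value

via_json = json.loads(json.dumps(data))
via_pickle = pickle.loads(pickle.dumps(data))

print(via_json)    # the key is now a string and the tuple is now a list
print(via_pickle)  # types are preserved exactly

json_error = None
try:
    json.dumps({"tags": {"x", "y"}})  # sets have no JSON equivalent
except TypeError as exc:
    json_error = str(exc)
print(json_error)
```

If those conversions are acceptable, or you can handle them with `default` and `object_hook`, JSON remains the safer choice.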

Shelve: A Persistent Dictionary

Sometimes I just need a simple, persistent key-value store without setting up a full database. The shelve module is perfect for this. It provides a dictionary-like interface, but the data is saved to a file, making it persistent across sessions.

Under the hood, shelve uses pickle to serialize the objects, so you get the benefit of storing complex types with the convenience of dictionary-style access.

Using it is straightforward, especially with a with statement to ensure the shelf is closed properly.

Python

import shelve

# Open a shelve file (creates it if it doesn't exist)
with shelve.open("my_data_store.db") as db:
    # Store data like a dictionary
    db["config_settings"] = {"theme": "dark", "retries": 3}
    db["user_list"] = ["Alice", "Bob"]

# Later, in the same or another script...
with shelve.open("my_data_store.db") as db:
    config = db["config_settings"]
    print(config)  # Output: {'theme': 'dark', 'retries': 3}
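
One behavior worth knowing follows from the fact that shelve pickles values: with the default settings, every read returns a fresh copy, so changing a stored list in place is not saved. The sketch below shows the problem and the usual fix of reassigning the key. The `demo_store` name is an assumption, and a temporary directory keeps the example self-contained.

```python
import os
import shelve
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "demo_store")  # hypothetical shelf name

    with shelve.open(path) as db:
        db["user_list"] = ["Alice", "Bob"]

    with shelve.open(path) as db:
        # This appends to a temporary copy, so the change is lost
        db["user_list"].append("Carol")
        unchanged = db["user_list"]

    with shelve.open(path) as db:
        # Read, modify, then assign back to persist the change
        users = db["user_list"]
        users.append("Carol")
        db["user_list"] = users
        updated = db["user_list"]

print(unchanged)
print(updated)
```

Passing `writeback=True` to `shelve.open()` is an alternative: it caches accessed entries and writes them back on close, at the cost of extra memory.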

Other Useful Formats

While JSON and Pickle cover most of my needs, Python has built-in support for other formats worth knowing.

- CSV: For handling tabular data, like spreadsheets, the csv module is indispensable. I find the csv.DictReader and csv.DictWriter classes particularly useful because they let you work with rows as dictionaries, using the header row for keys.

- XML: Though less common now than JSON for APIs, XML is still prevalent in configuration and enterprise systems. The xml.etree.ElementTree module provides a simple and efficient way to parse and create XML documents.

- Marshal: You might come across the marshal module, but I’d advise against using it for general data persistence. It’s used internally by Python for reading and writing .pyc files, and its format is not guaranteed to be compatible between different Python versions.
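
As a brief sketch of the first two, the snippet below writes and reads CSV rows as dictionaries and then parses a small XML string. The sample `rows` and the XML document are invented for the example, and an in-memory buffer stands in for a real file.

```python
import csv
import io
import xml.etree.ElementTree as ET

rows = [{"name": "Alice", "age": 30}, {"name": "Bob", "age": 25}]

# CSV: write rows as dictionaries, then read them back
buffer = io.StringIO(newline="")
writer = csv.DictWriter(buffer, fieldnames=["name", "age"])
writer.writeheader()
writer.writerows(rows)

buffer.seek(0)
read_back = list(csv.DictReader(buffer))
print(read_back)  # every value comes back as a string

# XML: parse a document and pull an attribute from each child element
root = ET.fromstring("<users><user name='Alice'/><user name='Bob'/></users>")
names = [user.get("name") for user in root.findall("user")]
print(names)
```

CSV carries no type information, so numbers such as `age` need to be converted back with `int()` after reading.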

Conclusion

Choosing the right serialization format is key to building robust and scalable applications, especially when you venture into fields like data science and machine learning. My general rule of thumb is to start with JSON for its interoperability and safety. If I’m working exclusively within a trusted Python ecosystem and need to serialize complex objects, I’ll use Pickle. For simple key-value persistence, Shelve is a great tool to have in your back pocket.
